Unveiling the Art: How to Train a Stable Diffusion Model

Stable Diffusion models, a family of text-to-image diffusion models first released in 2022, have garnered immense attention for their ability to generate high-quality images from text prompts.

In this comprehensive guide, we’ll unravel the intricacies of training Stable Diffusion Models, ensuring you’re equipped to harness their power for image generation.

The Prelude: Understanding Stable Diffusion Models

Before we delve into the nitty-gritty of training, let’s grasp the essence of Stable Diffusion Models.

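Positioned at the intersection of deep learning and image generation, these models learn to reverse a diffusion process. During training, images are gradually corrupted with noise and the model learns to undo that corruption; to generate, it starts from random noise and iteratively refines it into an image, guided by a text prompt. Stable Diffusion runs this process in a compressed latent space rather than on full-resolution pixels, which makes it far cheaper to train and run.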

The result? Striking images that exhibit a fine balance between realism and creativity.
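For readers who want the math behind this, the diffusion process above can be written compactly using the standard DDPM-style formulation that latent diffusion models such as Stable Diffusion build on. Here $\beta_t$ is the small noise variance added at step $t$, $\bar\alpha_t$ is the fraction of the original signal's variance that survives to step $t$, and $\epsilon_\theta$ is the network's prediction of the added noise. This is a simplified sketch: in Stable Diffusion, $x_0$ is a VAE latent rather than raw pixels, and $\epsilon_\theta$ also receives the text embedding of the prompt.

```latex
% Forward (noising) process, available in closed form for any timestep t
q(x_t \mid x_0) = \mathcal{N}\!\left(x_t;\ \sqrt{\bar\alpha_t}\,x_0,\ (1-\bar\alpha_t)\,I\right),
\qquad \bar\alpha_t = \prod_{s=1}^{t} (1-\beta_s)

% Equivalently, sample noise and mix it with the clean input
x_t = \sqrt{\bar\alpha_t}\,x_0 + \sqrt{1-\bar\alpha_t}\,\epsilon,
\qquad \epsilon \sim \mathcal{N}(0, I)

% Training objective: predict the noise that was added
\mathcal{L}(\theta) = \mathbb{E}_{x_0,\,t,\,\epsilon}\left[\,\lVert \epsilon - \epsilon_\theta(x_t, t) \rVert^2\,\right]
```

Generation runs the other way: it starts from pure noise $x_T \sim \mathcal{N}(0, I)$ and repeatedly uses $\epsilon_\theta$ to remove predicted noise until a clean sample remains.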

The Blueprint: Training Stable Diffusion Models

1. Setting the Stage: Google Colab as Your Arena

Google Colab is a convenient platform for experimenting with Stable Diffusion training.

Its cloud-based notebooks and free GPU access make it an accessible starting point without buying hardware. Keep in mind that free-tier GPUs come with limited memory and session time, so longer or larger training runs may need a paid tier or dedicated hardware.
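Before starting a run, it is worth confirming that the Colab runtime actually has a GPU attached (in Colab this is selected through the runtime type setting). Below is a minimal, standard-library-only check, assuming the NVIDIA driver's `nvidia-smi` tool is on the PATH, as it normally is on GPU runtimes; the helper name `gpu_summary` is hypothetical. If PyTorch is already installed, `torch.cuda.is_available()` is the more direct check.

```python
import shutil
import subprocess
from typing import Optional


def gpu_summary() -> Optional[str]:
    """Return the GPU list reported by nvidia-smi, or None if no NVIDIA GPU is visible."""
    # No nvidia-smi binary usually means no NVIDIA driver, i.e. a CPU-only runtime.
    if shutil.which("nvidia-smi") is None:
        return None
    # `nvidia-smi -L` prints one line per visible GPU.
    result = subprocess.run(["nvidia-smi", "-L"], capture_output=True, text=True)
    if result.returncode != 0:
        return None
    return result.stdout.strip() or None


if __name__ == "__main__":
    info = gpu_summary()
    print(info if info else "No GPU detected: change the runtime type before training.")
```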

2. Loading the Arsenal: Images as the Training Ground

The heart of training Stable Diffusion Models lies in the choice of training images.

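These images are the examples the model learns from: during training it repeatedly sees noisy versions of them and learns to recover the clean originals.

Aim for a diverse and representative dataset so the model can learn the nuances of different visual elements. Because Stable Diffusion is a text-to-image model, each image is normally paired with a caption describing it, and images are typically cropped and resized to the model's training resolution.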
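A minimal data-preparation sketch follows. It assumes fine-tuning data comes as image-caption pairs and that each image will be center-cropped to a square before being resized to the training resolution (512×512 for Stable Diffusion v1 models). The helper names, file names, and captions are hypothetical. The manifest uses a `metadata.jsonl` layout with `file_name` and `text` fields, one convention understood by Hugging Face's `imagefolder` dataset loader; check what your own training script expects.

```python
import json
from typing import Dict, List, Tuple


def center_crop_box(width: int, height: int) -> Tuple[int, int, int, int]:
    """Largest centered square as (left, top, right, bottom), the box format PIL's Image.crop accepts."""
    side = min(width, height)
    left = (width - side) // 2
    top = (height - side) // 2
    return (left, top, left + side, top + side)


def build_manifest(captions: Dict[str, str]) -> List[str]:
    """Turn {file_name: caption} into JSON lines, one per image."""
    lines = []
    for file_name, text in sorted(captions.items()):
        if not text.strip():
            continue  # drop pairs with no usable caption
        lines.append(json.dumps({"file_name": file_name, "text": text.strip()}))
    return lines


if __name__ == "__main__":
    # Hypothetical dataset entries.
    captions = {
        "cat_01.png": "a watercolor painting of a cat on a windowsill",
        "cat_02.png": "  ",
        "dog_01.png": "a pencil sketch of a dog in a park",
    }
    print(center_crop_box(640, 480))  # (80, 0, 560, 480)
    print("\n".join(build_manifest(captions)))
```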

The Symphony of Training: Steps in the Process

1. Initiating the Dance: Training the Base Model

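The journey begins with a base model. Training Stable Diffusion from scratch requires very large image-caption datasets and substantial GPU compute, so in practice most people start from publicly released pretrained weights rather than training the base model themselves.

Either way, base training works the same way: the model is shown training images with noise added at randomly chosen levels (timesteps) and learns to predict that noise. The goal at this stage is not perfection but laying the foundation for subsequent fine-tuning.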
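Concretely, in the standard noise-prediction objective, each training step picks a random timestep, noises a clean example using the closed-form forward process, and scores the network on how well it predicts that noise. The sketch below computes that loss for one example stored as a plain Python list standing in for a (latent) image. `predict_noise` is a hypothetical placeholder for the real denoising network, and the tiny `alpha_bars` schedule is an illustrative assumption; a real setup would also pass the text embedding and backpropagate the loss with a deep learning framework.

```python
import math
import random
from typing import Callable, List, Sequence

# Hypothetical model signature: (noisy sample, timestep) -> predicted noise.
NoisePredictor = Callable[[List[float], int], List[float]]


def diffusion_loss(x0: Sequence[float], alpha_bars: Sequence[float],
                   predict_noise: NoisePredictor, rng: random.Random) -> float:
    """Noise-prediction (epsilon) loss for one example at one random timestep."""
    t = rng.randrange(len(alpha_bars))           # random timestep
    eps = [rng.gauss(0.0, 1.0) for _ in x0]      # Gaussian noise, same size as x0
    a = alpha_bars[t]
    # Closed-form forward process: x_t = sqrt(a) * x0 + sqrt(1 - a) * eps
    x_t = [math.sqrt(a) * x + math.sqrt(1.0 - a) * e for x, e in zip(x0, eps)]
    eps_hat = predict_noise(x_t, t)
    # Mean squared error between the true and predicted noise.
    return sum((e - h) ** 2 for e, h in zip(eps, eps_hat)) / len(x0)


if __name__ == "__main__":
    rng = random.Random(0)
    alpha_bars = [0.99, 0.9, 0.5, 0.1]           # tiny toy schedule (assumption)
    x0 = [0.5, -1.0, 0.25]                       # stand-in for a latent image
    untrained = lambda x_t, t: [0.0] * len(x_t)  # hypothetical model that always predicts zero noise
    print(diffusion_loss(x0, alpha_bars, untrained, rng))
```

Training repeats this over many examples and timesteps, nudging the network's weights to drive the loss down.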

2. Tuning the Orchestra: Fine-Tuning for Precision

Fine-tuning is the secret sauce in the Stable Diffusion symphony. Once the base model has danced through its initial training, fine-tuning continues training it on your own dataset to refine the model's understanding.

This is a delicate process of adjusting hyperparameters, such as the learning rate and the number of training steps, so the model adapts to the specific nuances of your chosen dataset without overwriting what it has already learned.
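Fine-tuning typically uses a small learning rate, and a common pattern is to ramp it up linearly over a short warmup and then hold it constant. The sketch below encodes that pattern. The `FineTuneConfig` dataclass and every number in it are hypothetical illustrations, not recommended settings for any particular model or training script.

```python
from dataclasses import dataclass


@dataclass
class FineTuneConfig:
    # Hypothetical values for illustration only; tune them for your data and hardware.
    base_lr: float = 1e-5
    warmup_steps: int = 100


def learning_rate(step: int, cfg: FineTuneConfig) -> float:
    """Linear warmup from near zero up to base_lr, then constant."""
    if step < cfg.warmup_steps:
        return cfg.base_lr * (step + 1) / cfg.warmup_steps
    return cfg.base_lr


if __name__ == "__main__":
    cfg = FineTuneConfig()
    for step in (0, 49, 99, 100, 1999):
        print(step, learning_rate(step, cfg))
```

The warmup avoids large, destabilizing updates in the first few steps while the optimizer's statistics settle.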

3. The Art of Noise: Introducing Randomness

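An integral element in training Stable Diffusion models is the introduction of random Gaussian noise. During training, noise is added to each image according to a fixed schedule, ranging from barely perceptible at early timesteps to almost pure noise at the last, and the model learns to predict and remove it.

At generation time, sampling starts from random noise, so different random seeds yield different images for the same prompt. This is where the process gets its element of unpredictability and variety.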
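To see how the noise level grows across timesteps, here is a sketch of a "scaled linear" beta schedule, computed as the diffusers library defines that option (betas spaced linearly in square-root space, then squared). The 0.00085 to 0.012 range over 1,000 steps matches what is commonly used for Stable Diffusion v1 checkpoints; treat these constants as assumptions and read the real values from your checkpoint's scheduler configuration.

```python
import math
from typing import List


def scaled_linear_betas(num_steps: int = 1000, beta_start: float = 0.00085,
                        beta_end: float = 0.012) -> List[float]:
    """Betas spaced linearly in sqrt-space, then squared."""
    lo, hi = math.sqrt(beta_start), math.sqrt(beta_end)
    step = (hi - lo) / (num_steps - 1)
    return [(lo + i * step) ** 2 for i in range(num_steps)]


def cumulative_alphas(betas: List[float]) -> List[float]:
    """alpha_bar_t = prod over s <= t of (1 - beta_s): the signal variance left at step t."""
    out, running = [], 1.0
    for b in betas:
        running *= 1.0 - b
        out.append(running)
    return out


if __name__ == "__main__":
    alpha_bars = cumulative_alphas(scaled_linear_betas())
    for t in (0, 250, 500, 750, 999):
        ab = alpha_bars[t]
        # Coefficients from x_t = sqrt(ab) * x0 + sqrt(1 - ab) * eps
        print(f"t={t:4d}  signal={math.sqrt(ab):.3f}  noise={math.sqrt(1 - ab):.3f}")
```

Printing the two coefficients shows the signal fading and the noise taking over as `t` approaches the final step.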

Mastering the Choreography: Best Practices for Training

1. Patience as a Virtue: Understanding the Time Investment

Remember, this is not a sprint; it’s a marathon. The intricacies of the diffusion process and fine-tuning demand time and computational resources.

Exercise patience and allow the model the time it needs to evolve into a creative powerhouse.
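A quick back-of-the-envelope estimate helps decide whether a run fits in a single session. The helper below is a hypothetical sketch, and the dataset size, batch size, epoch count, and seconds per step in the example are made-up numbers; measure seconds per step on your own hardware with a short trial run.

```python
import math


def estimate_training_hours(num_images: int, batch_size: int, epochs: int,
                            seconds_per_step: float) -> float:
    """Steps = epochs * ceil(num_images / batch_size); hours = steps * seconds_per_step / 3600."""
    steps_per_epoch = math.ceil(num_images / batch_size)
    total_steps = epochs * steps_per_epoch
    return total_steps * seconds_per_step / 3600.0


if __name__ == "__main__":
    # Hypothetical run: 1,000 images, batch size 4, 20 epochs, 1.5 s per step.
    hours = estimate_training_hours(1000, 4, 20, 1.5)
    print(f"~{hours:.1f} hours")  # 250 steps/epoch * 20 = 5,000 steps -> ~2.1 hours
```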

2. Model Design Matters: Crafting Your Artisanal Approach

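The architecture of your Stable Diffusion model is akin to an artisanal recipe. Stable Diffusion itself combines three components: a variational autoencoder (VAE) that maps images to and from a compressed latent space, a U-Net that predicts noise in that latent space, and a text encoder (CLIP in the v1 models) that turns prompts into conditioning.

If you are designing your own diffusion model, experiment with different neural network architectures, tweaking and refining until you find the right blend for your use case. If you are fine-tuning a released checkpoint, the architecture is fixed, and the design choices shift to which components to train and which to keep frozen. Model design is the craft that transforms training into an art.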
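One concrete design decision inside a diffusion model's denoising network is how it is told which timestep, and therefore how much noise, it is looking at. A common choice is a sinusoidal timestep embedding borrowed from Transformer positional encodings. The sketch below computes one in plain Python; the `[sines..., cosines...]` ordering and `max_period=10000` are assumptions, since implementations differ in these details.

```python
import math
from typing import List


def timestep_embedding(t: int, dim: int, max_period: float = 10000.0) -> List[float]:
    """Sinusoidal embedding of timestep t: first half sines, second half cosines."""
    if dim % 2 != 0:
        raise ValueError("dim must be even")
    half = dim // 2
    # Frequencies decay geometrically from 1 toward 1/max_period.
    freqs = [math.exp(-math.log(max_period) * i / half) for i in range(half)]
    angles = [t * f for f in freqs]
    return [math.sin(a) for a in angles] + [math.cos(a) for a in angles]


if __name__ == "__main__":
    emb = timestep_embedding(500, 8)
    print([round(v, 3) for v in emb])
```

Because each timestep maps to a unique pattern across many frequencies, the network can distinguish nearby noise levels as well as distant ones.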

3. Validation: Ensuring Quality in the Creative Process

Validation is the checkpoint that ensures the quality of your trained model.

Allocate a portion of your dataset for validation, allowing you to gauge the model’s performance on images it hasn’t encountered during training.

This step is crucial for detecting overfitting and checking that your model generalizes beyond its training images.
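A minimal sketch of holding out a validation set: it shuffles with a fixed seed so the split is reproducible across runs. The helper name, the 10% fraction, and the file names are hypothetical. For a generative model, performance on the held-out set is typically tracked as the noise-prediction loss on those examples, often alongside sample images generated from fixed prompts and seeds.

```python
import random
from typing import List, Sequence, Tuple


def train_val_split(items: Sequence[str], val_fraction: float = 0.1,
                    seed: int = 42) -> Tuple[List[str], List[str]]:
    """Reproducibly shuffle items and hold out val_fraction of them (at least one)."""
    if not 0.0 < val_fraction < 1.0:
        raise ValueError("val_fraction must be between 0 and 1")
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)  # fixed seed -> same split every run
    n_val = max(1, round(len(shuffled) * val_fraction))
    return shuffled[n_val:], shuffled[:n_val]


if __name__ == "__main__":
    files = [f"img_{i:03d}.png" for i in range(50)]  # hypothetical file names
    train, val = train_val_split(files)
    print(len(train), len(val))  # 45 5
```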

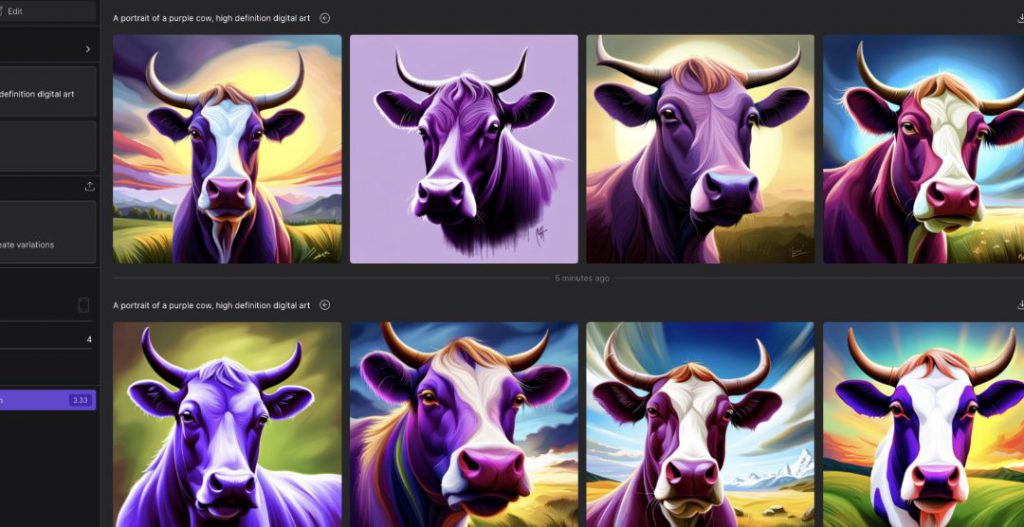

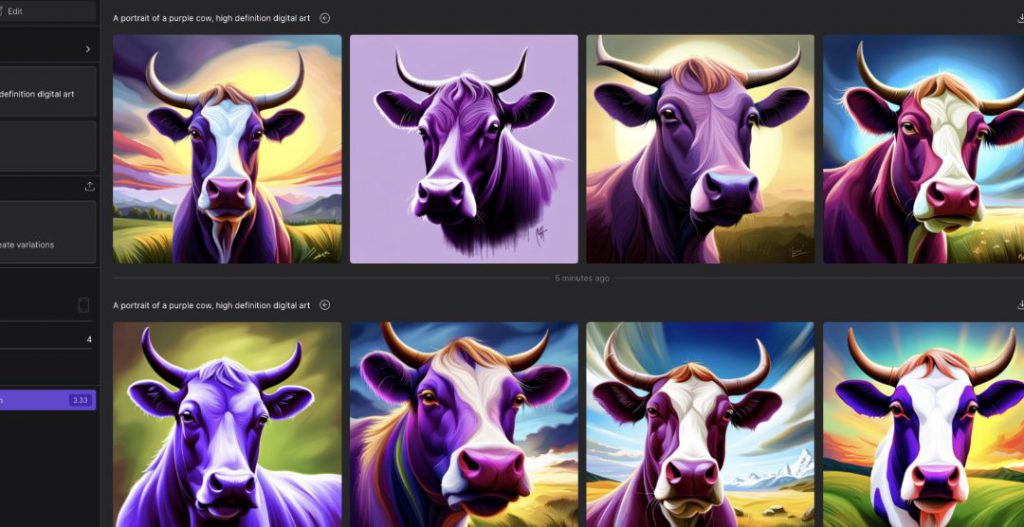

The Crescendo: Generating Images with Trained Stable Diffusion Models

As your Stable Diffusion Model completes its training journey, you stand at the threshold of a realm where machine learning and creativity converge.

With a finely tuned model, you can now generate images that resonate with the artistic essence of your training dataset.
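Generation runs the diffusion process in reverse: start from pure random noise and repeatedly use the trained network to remove predicted noise. The sketch below uses a deterministic DDIM-style update (one of several samplers; the original DDPM sampler injects fresh noise at each step instead) over a plain list. `predict_noise` is a hypothetical stand-in for the trained network; in Stable Diffusion the loop runs on latents conditioned on the text prompt, and the VAE decoder turns the final latent into pixels.

```python
import math
import random
from typing import Callable, List, Sequence

# Hypothetical model signature: (noisy sample, timestep) -> predicted noise.
NoisePredictor = Callable[[List[float], int], List[float]]


def ddim_sample(size: int, alpha_bars: Sequence[float], timesteps: Sequence[int],
                predict_noise: NoisePredictor, rng: random.Random) -> List[float]:
    """Deterministic DDIM sampling (eta = 0) over timesteps given in descending order."""
    x = [rng.gauss(0.0, 1.0) for _ in range(size)]  # start from pure noise
    x0_pred = x
    for i, t in enumerate(timesteps):
        a_t = alpha_bars[t]
        eps = predict_noise(x, t)
        # Estimate the clean sample implied by the predicted noise.
        x0_pred = [(xi - math.sqrt(1 - a_t) * e) / math.sqrt(a_t) for xi, e in zip(x, eps)]
        if i + 1 < len(timesteps):
            a_prev = alpha_bars[timesteps[i + 1]]
            # Move the estimate to the next, lower noise level.
            x = [math.sqrt(a_prev) * c + math.sqrt(1 - a_prev) * e for c, e in zip(x0_pred, eps)]
    return x0_pred


if __name__ == "__main__":
    # Toy check with a hypothetical "oracle" predictor that knows the target sample.
    target = [0.3, -0.7, 1.2]
    alpha_bars = [0.999, 0.9, 0.6, 0.2, 0.05]
    oracle = lambda x_t, t: [(xt - math.sqrt(alpha_bars[t]) * y) / math.sqrt(1 - alpha_bars[t])
                             for xt, y in zip(x_t, target)]
    print(ddim_sample(3, alpha_bars, [4, 3, 2, 1, 0], oracle, random.Random(0)))
```

With a perfect noise predictor the loop recovers the target exactly; a trained network only approximates this, which is why sample quality depends so heavily on training.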

The Epilogue: Your Journey as a Stable Diffusion Maestro

Training Stable Diffusion Models is a dynamic journey that demands a fusion of technical expertise and creative intuition.

Embrace the learning process, experiment with different approaches, and revel in the evolution of your model into an artist in its own right.

In conclusion, the realm of Stable Diffusion Models opens up unprecedented possibilities at the intersection of machine learning and artistic expression.

Armed with this guide, embark on your journey to master the training of Stable Diffusion Models and unleash the creative potential within image generation.